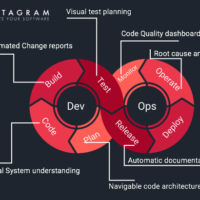

In this blog I will share some thoughts on the dangers of large-scale software projects.

I will write several articles under this title.

One of the most common problems in big projects is that some code files start causing more and more trouble while the rest remain clean. Usually it is about the Blob antipattern, where a single file or class accumulates far too many responsibilities.

But why does that happen? Sometimes large code files are readable, but they are not maintainable. Why do the problems start accumulating?

Factors and triggers that make it happen

- code complexity is too high

- bad readability

- complex domain knowledge

- too much overlapping functionality

- copy-pasted code

These lead to lower work morale, and new changes turn into tweaks and one-liners that usually do not improve the situation but introduce new regression bugs into the software.

When several of the above factors are present, the damage starts to be huge. Developers do not have the time or patience to check the other relevant places in the same file, and they make the change with a “quick fix” mentality without enough understanding. Big file size, poor readability and unneeded complexity demotivate developers even if they are well experienced.

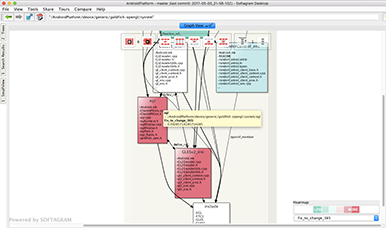

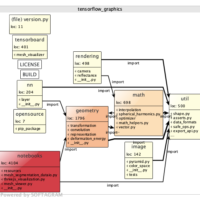

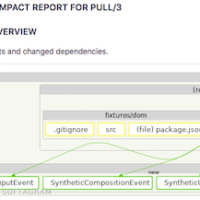

Fix to change ratio

Instead of concentrating on a simple metric such as LOC or complexity, I would rather use the version history data to understand how often the code gets broken in those large files.
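
As a rough illustration, the idea can be sketched in a few lines of Python. This is only a minimal sketch under my own assumptions, not a definitive implementation: here the ratio for a file is taken to be the number of fix commits touching it divided by the number of all commits touching it, and a commit is guessed to be a fix when its message contains a word such as "fix" or "bug". The names `FIX_KEYWORDS`, `is_fix` and `fix_to_change_ratio`, as well as the sample history, are hypothetical.

```python
from collections import Counter

# Assumption: a commit counts as a "fix" if its message contains one of these words.
FIX_KEYWORDS = ("fix", "bug", "regression", "hotfix")


def is_fix(message: str) -> bool:
    """Return True if the commit message looks like a bug fix (crude heuristic)."""
    lowered = message.lower()
    return any(word in lowered for word in FIX_KEYWORDS)


def fix_to_change_ratio(commits):
    """Map each file path to (fix commits touching it) / (all commits touching it).

    `commits` is an iterable of (message, [file paths]) pairs.
    """
    changes = Counter()
    fixes = Counter()
    for message, files in commits:
        for path in set(files):  # count each file at most once per commit
            changes[path] += 1
            if is_fix(message):
                fixes[path] += 1
    return {path: fixes[path] / changes[path] for path in changes}


# Hypothetical history, for illustration only.
history = [
    ("Add export feature", ["core/manager.py", "ui/export.py"]),
    ("Fix crash in export", ["core/manager.py"]),
    ("Bugfix: wrong totals", ["core/manager.py"]),
    ("Refactor export dialog", ["ui/export.py"]),
]

ratios = fix_to_change_ratio(history)
# List the files with the highest ratio first.
for path, ratio in sorted(ratios.items(), key=lambda item: item[1], reverse=True):
    print(f"{path}: {ratio:.2f}")
```

In a real project the commit records could be collected from the version control history instead of being typed in by hand, and the keyword heuristic would need tuning to the team's commit message conventions.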

So, as long as the fix to change ratio stays low for the larger files, the situation is tolerable. The next part in the series will be about dependency metrics.

– Ville

Would you like to know which are your software's worst components? Analyse it for free in Softagram Cloud!